What is Claude AI?

Claude AI is Anthropic’s family of large language models (LLMs). It was created to be more than just another chatbot. From the start, Anthropic designed Claude to be helpful, harmless, and honest. These three principles are repeated across official materials and set Claude apart in the AI landscape.

Claude AI works as a digital assistant that can analyze text, generate content, code, and even understand images. The difference lies in how it is trained and governed.

Anthropic introduced a framework called Constitutional AI, which encodes rules of conduct directly into the model. This approach reduces harmful outputs and makes the assistant more steerable for enterprises and individuals.

Today, Claude AI is available in multiple versions (Haiku, Sonnet, Opus) to balance speed, cost, and intelligence. It is distributed via the Claude website, API, and enterprise channels such as Amazon Bedrock and Google Cloud Vertex AI, making it accessible for both consumers and organizations.

Who created Claude AI?

Claude AI was created by Anthropic, an AI safety and research company founded in 2021 by former OpenAI executives. The founders include Dario Amodei, Daniela Amodei, Jared Kaplan, Jack Clark, and Ben Mann. Anthropic is a Public Benefit Corporation (PBC), meaning it is legally required to consider the public good alongside profit.

This corporate structure is reinforced by the Long-Term Benefit Trust, which holds governance power to ensure that Anthropic’s mission of safe AI development remains central, even under commercial pressure.

Origins and Purpose

Anthropic’s mission is to build AI that benefits society in the long run. Claude AI reflects this mission through two main commitments:

- Safety-first research: making models reliable, interpretable, and aligned with human values.

- Practical deployment: delivering Claude as a useful assistant while preserving strict safety guardrails.

This purpose also explains Claude’s design choices. For example, Anthropic delayed the public release of early versions in 2022 to address safety concerns, even when that meant losing publicity against competitors like ChatGPT.

In summary, Claude AI is not only a powerful assistant. It is a product born from a safety-driven philosophy, a governance model designed for accountability, and a mission to deliver AI that is both useful and socially responsible.

Learn more on Anthropic’s official Claude page for features, availability, and product updates. You can also read the “What is Claude?” article in Anthropic’s Help Center for a concise definition.

Evolution of Claude AI (2023–2025)

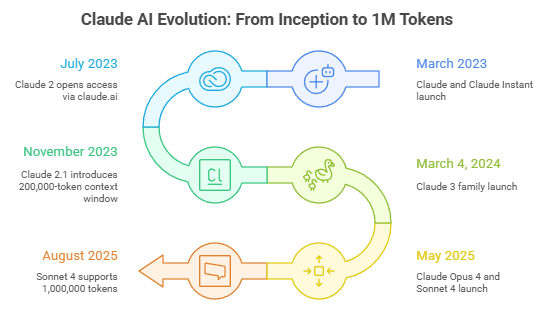

Claude AI has progressed through clear generations. Each step increased context length, added reasoning controls, and tightened safety. This timeline shows how Claude moved from a steerable assistant to a long-context, enterprise-ready platform.

Claude 1 and Claude Instant (2023)

Claude first appeared in March 2023 with two variants: Claude and Claude Instant. The goal was a steerable assistant that adapts tone and behavior while staying helpful, harmless, and honest. From day one, Anthropic emphasized safety over speed: earlier releases were reportedly delayed in 2022 to resolve safety concerns, trading publicity for caution.

Instant evolved in parallel and inherited improvements from the main line, signaling a product strategy: even the “small” model must be useful, not merely cheap. This early posture—steerability + restraint—set the template for later families and explains why Claude’s roadmap talks about governance as much as raw capability.

Claude 2 and Claude 2.1 (2023)

In July 2023, Claude 2 opened access via claude.ai and the API, moving beyond limited trials. The pivotal update came in November 2023 with Claude 2.1 and its 200,000-token context window (about 500 pages). That shift enabled whole-document and multi-file workflows without aggressive chunking, especially for codebases and legal briefs.

Anthropic paired long context with guidance: anchor quotes, structure spans, and ask for citations to reduce “lost-in-the-middle” errors. Anthropic does not publish parameter counts, so comparisons should focus on capabilities (context, tool use, reasoning) rather than rumored sizes. Claude 2.1 also targeted lower hallucinations, framing long-context reliability as a usage discipline, not just a bigger buffer.

The Claude 3 Family (2024)

On March 4, 2024, Anthropic introduced a three-tier family—Haiku (speed/cost), Sonnet (balanced), Opus (top capability). This let teams tune latency and price against intelligence for production use. Vision input and stronger coding rounded out the assistant role. Critically, distribution expanded through Amazon Bedrock and Google Cloud Vertex AI, bringing SSO, logging, quotas, and regional controls that enterprises require.

Claude expanded to Europe in May 2024 (web and iOS) and added a Team plan, evidence that Claude was not only an API but a product suite aimed at organizations. Long context (up to 200k tokens on supported models) remained a signature, now paired with clearer enterprise surfaces and governance artifacts (system cards, policy pages) to make deployments auditable.

Claude 4 and the 1M-token Era (2025)

In May 2025, Anthropic launched Claude Opus 4 and Claude Sonnet 4 under the Responsible Scaling Policy. Opus 4 shipped at ASL-3, hardening misuse defenses and model-weight security; Sonnet 4 ran at ASL-2. A headline feature was extended thinking: developers allocate a token budget for step-by-step reasoning before the final answer, trading latency/cost for depth on hard tasks.

Then in August 2025, Sonnet 4 added support for 1,000,000 tokens of context across Anthropic’s API (public beta) and partner clouds. That fivefold jump enabled single-pass reads of whole repositories and multi-paper packets, reducing the need for complex chunking. Long context still demands good prompt structure and verification: bigger windows amplify good practices and mistakes alike.

Deprecations and Lifecycle Management

Anthropic actively retires models to keep customers current. Claude 2, 2.1, and Sonnet 3 were retired on July 21, 2025, with advance notice and recommended upgrade paths (e.g., to the 4-series). This lifecycle discipline matters for API stability, safety posture, and policy alignment. Teams should track deprecation pages and pin model IDs to avoid surprises when endpoints change.
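One way to act on the pin-and-track advice is a small registry that flags a pinned model as it approaches retirement. The sketch below is illustrative only: the registry layout, the `check_model` helper, and the 60-day warning window are assumptions, and the model ID strings should be checked against Anthropic’s deprecations page. Only the July 21, 2025 date comes from the text above.

```python
from datetime import date, timedelta

# Hypothetical registry: pinned model ID -> retirement date (None = none announced).
# Verify IDs and dates against the official deprecations page.
RETIREMENTS = {
    "claude-2.0": date(2025, 7, 21),
    "claude-2.1": date(2025, 7, 21),
    "claude-3-sonnet-20240229": date(2025, 7, 21),
    "claude-sonnet-4-20250514": None,
}

WARN_WINDOW = timedelta(days=60)  # assumed lead time for rehearsing an upgrade


def check_model(model_id: str, today: date) -> str:
    """Return 'ok', 'warn', or 'retired' for a pinned model ID."""
    if model_id not in RETIREMENTS:
        # Refuse unpinned aliases so upgrades are always deliberate.
        raise KeyError(f"unpinned or unknown model ID: {model_id}")
    retires = RETIREMENTS[model_id]
    if retires is None:
        return "ok"
    if today >= retires:
        return "retired"
    if retires - today <= WARN_WINDOW:
        return "warn"
    return "ok"
```

Running a check like this in CI or at service start-up turns a deprecation notice into a failing build rather than a production incident.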

Technical Architecture of Claude AI

Claude AI follows a pragmatic design: publish what matters for safe deployment (capabilities, controls, governance) and avoid leaking internals that Anthropic doesn’t disclose. This section explains how Claude works in practice: the model family, training stack, data sources, long-context mechanics, and the agentic interfaces teams actually use.

Core model family (Transformer lineage)

Anthropic describes Claude as a Transformer-based LLM line. Parameter counts and fine architectural details are not public, so comparisons should focus on capabilities (context length, reasoning controls, tool interfaces) instead of rumored sizes. This posture is consistent across Claude 2 → 4 and is also noted by Stanford’s Transparency Index as common in the sector.

Training stack (Constitutional AI + RLAIF)

On top of large-scale pretraining, Anthropic applies Constitutional AI (CAI): the model self-critiques using an explicit “constitution” of principles, then learns preferences with Reinforcement Learning from AI Feedback (RLAIF). The method reduces harmful outputs while keeping the assistant engaged; Anthropic later ties deployment to governance gates under the Responsible Scaling Policy (RSP).

Data sources and collection practices

Claude’s training mix includes public web (robots.txt respected), licensed non-public datasets, and consented user data (opt-in), with standard filtering and de-duplication. Enterprise/API usage is contractually separated from consumer products, which in 2025 shifted to explicit opt-in/opt-out. Independent reporting also documented practical frictions around the “ClaudeBot” crawler—robots signals were honored once configured.

Long context (200k → 1M tokens)

Long context is a defining trait. Claude 2.1 introduced 200k tokens plus guidance to avoid “lost-in-the-middle” errors with structured prompting. In Aug 2025, Sonnet 4 gained up to 1,000,000 tokens (API, later Vertex/Bedrock), enabling whole-repo or multi-paper analysis in one pass—though Anthropic still recommends disciplined prompting and evaluation.

Reasoning controls (Extended thinking)

From Claude 3.7 and formalized in Claude 4, developers can enable extended thinking: set a token budget for internal reasoning traces before the final answer. It’s an inference-time control—not a different base model—that trades latency/cost for deeper step-by-step reasoning and auditable traces on hard tasks.

Claude AI Extended Thinking: How Token Budgets Affect Results

When you enable extended thinking in Claude AI, you allocate a private reasoning budget before the final answer. This improves depth on hard tasks, with latency and cost trade-offs.

Tip: set different budgets per route (e.g., “light”, “standard”, “deep-dive”) and log budget vs. outcome to tune Claude AI quality.
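The per-route tip can be sketched as a small request builder. This is a minimal sketch, not Anthropic’s reference code: the route names come from the tip, but the budget values, the `ANSWER_TOKENS` reserve, and the helper names are assumptions. The `thinking` field follows the request shape of the Messages API as commonly documented; confirm the minimum budget and field names against the current API reference.

```python
# Assumed budgets per route; tune them against logged outcomes.
THINKING_BUDGETS = {"light": 0, "standard": 4_000, "deep-dive": 16_000}
MIN_BUDGET = 1_024     # assumed minimum thinking budget; check the API docs
ANSWER_TOKENS = 2_000  # assumed room reserved for the final answer


def build_request(route: str, prompt: str, model: str) -> dict:
    """Build keyword arguments for a Messages API call on a given route."""
    budget = THINKING_BUDGETS[route]
    request = {
        "model": model,
        "max_tokens": ANSWER_TOKENS,
        "messages": [{"role": "user", "content": prompt}],
    }
    if budget:
        budget = max(budget, MIN_BUDGET)
        # max_tokens must cover the thinking budget plus the final answer.
        request["max_tokens"] = budget + ANSWER_TOKENS
        request["thinking"] = {"type": "enabled", "budget_tokens": budget}
    return request


def budget_log_record(route: str, request: dict, outcome: str) -> dict:
    """One record per call, so budget can be compared with outcome later."""
    thinking = request.get("thinking", {})
    return {
        "route": route,
        "budget_tokens": thinking.get("budget_tokens", 0),
        "outcome": outcome,
    }
```

With the official Python SDK, the returned dictionary would typically be passed as `client.messages.create(**request)`; the records from `budget_log_record` are what you would aggregate to see whether a larger budget actually improves results on a route.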

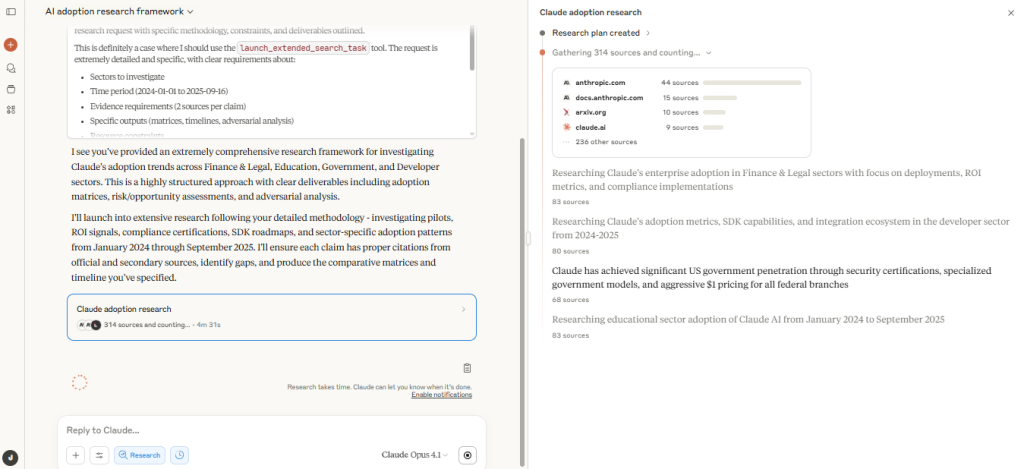

Tool use and agentic surfaces

Claude’s Messages API supports tool use via JSON-schema functions; the model decides when/how to call them and your app executes results. Two higher-leverage betas expand capability and risk surface:

- Computer Use: desktop vision + mouse/keyboard actions for RPA-like flows (requires tight guardrails).

- Code Execution: LLM-local Bash + file I/O sandbox for analysis and coding.

Anthropic evaluates these agentic features in the Claude 4 System Card when assigning ASL levels.
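The basic tool-use loop described in this section (declare a schema, let the model request a call, execute it in your app) can be sketched as follows. The tool `get_order_status` and its handler are hypothetical, and content blocks are shown as plain dictionaries for illustration; the SDK returns typed objects with the same fields.

```python
import json

# Tool declaration in the JSON-schema shape used by the Messages API.
# `get_order_status` is a hypothetical tool for illustration.
TOOLS = [
    {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order by ID.",
        "input_schema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    }
]


def get_order_status(order_id: str) -> dict:
    """Stand-in for a real backend call."""
    return {"order_id": order_id, "status": "shipped"}


HANDLERS = {"get_order_status": get_order_status}


def run_tool_calls(content_blocks: list) -> list:
    """Execute each tool_use block and return matching tool_result blocks."""
    results = []
    for block in content_blocks:
        if block.get("type") != "tool_use":
            continue  # text blocks need no action
        handler = HANDLERS.get(block["name"])
        if handler is None:
            output, is_error = f"unknown tool: {block['name']}", True
        else:
            output, is_error = json.dumps(handler(**block["input"])), False
        results.append({
            "type": "tool_result",
            "tool_use_id": block["id"],
            "content": output,
            "is_error": is_error,
        })
    return results
```

In a real integration, the returned blocks are sent back to the model in a follow-up user message so it can compose the final answer.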

Distribution channels (API, Bedrock, Vertex, MCP)

Claude ships on Anthropic’s API and enterprise clouds—Amazon Bedrock and Google Cloud Vertex AI—providing SSO, logging, quotas, and regional controls. The 1M-token expansion rolled out across these channels in Aug 2025. Anthropic also backs the Model Context Protocol (MCP) to connect assistants to tools/data in an auditable, standardized way. Channel choice affects quotas, latency, observability, and when you can exploit 1M context.

Where to Run Claude AI: API, Bedrock, or Vertex?

Choose the channel that fits your organization’s controls. Claude AI offers consistent capabilities with different operational envelopes.

Start where identity and logging are already standardized. You can mix channels while keeping Claude AI prompts and patterns consistent.

What’s deliberately undisclosed—and how to evaluate

Anthropic does not publish parameter counts, MoE layouts, or full corpus composition. Rigorous teams therefore benchmark Claude on workload-level outcomes (long-context tasks, sensitivity to thinking budgets, tool-chain reliability) and treat documented controls—context window, extended thinking, ASL/RSP—as first-class knobs.

Architecturally, think of Claude AI as a Transformer family operationalized through capability controls (context up to 1M, extended thinking), agentic interfaces (tool use, Computer Use, Code Execution), and governed deployment (RSP/ASL on API, Bedrock, Vertex). That combination—not raw parameter counts—explains why Anthropic Claude competes strongly in enterprise-grade scenarios.

Key Features and Capabilities

Claude AI combines very long context, tunable reasoning, multimodal understanding, and safe tool use—delivered through enterprise-ready channels.

Long-context (200k → up to 1M tokens)

Single-pass reads of repos or multi-paper packets. Powerful, not magic—use anchors and verification.

Use it when: whole-repo or long-document synthesis.

Extended reasoning (thinking token budgets)

Allocate a private “thinking” budget before the final answer to deepen analysis (latency/cost ↑).

Use it when: complex planning, math, audits.

Multimodal inputs (text + vision)

Images via the Messages API; Claude 4 offers “fast” vs. “extended” modes alongside stronger coding.

Use it when: screenshots, diagrams, or visual context matter.

Tool use & agentic surfaces

JSON-schema tools, Code Execution (sandbox) and Computer Use (desktop control). Treat as privileged.

Use it when: orchestrating actions with allow-lists, approvals, and logging.

Enterprise distribution (API, Bedrock, Vertex)

Run Claude AI on Anthropic API or partner clouds for SSO, quotas, logging, and regional controls.

Use it when: you need compliance-aligned deployments.

Performance signals & safety

Treat leaderboards as signals; validate on your workload. Govern under RSP/ASL with runtime I/O guards.

Use it when: evaluating models or planning safe rollouts.

Long-context understanding (200k → 1M tokens)

Claude moved from a 200,000-token window in 2.1 to up to 1,000,000 tokens with Sonnet 4 (Aug 2025), enabling single-pass reads of whole repos or multi-paper packets. See: 1M-context announcement.

It’s powerful, but not magic—prompt structure and verification remain essential for reliable retrieval.

Extended reasoning (thinking token budgets)

From Claude 3.7 and formalized in Claude 4, you can allocate a budget of “thinking” tokens before the final answer (inference-time control). See safety/governance details in the Claude 4 System Card.

Multimodal inputs (text + vision)

Since the Claude 3 line, models accept images via the Messages API; Claude 4 adds “fast vs. extended” modes and stronger coding alongside vision.
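As a rough illustration of image input, the helper below builds a user message that pairs one base64-encoded image with a text question. The helper name is an assumption; the block layout follows the Messages API image format as commonly documented, so check supported media types and size limits in the current reference.

```python
import base64


def image_message(image_bytes: bytes, media_type: str, question: str) -> dict:
    """Build a user message containing one base64 image and a text question."""
    encoded = base64.standard_b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {
                "type": "image",
                "source": {
                    "type": "base64",
                    "media_type": media_type,  # e.g. "image/png"
                    "data": encoded,
                },
            },
            {"type": "text", "text": question},
        ],
    }
```

Placing the image before the question keeps the prompt in the order a reader would encounter it: first the screenshot or diagram, then what to do with it.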

Tool use & agentic surfaces

Declare JSON-schema tools through the Messages API; Claude decides whether and how to call them, and your app executes the call. High-leverage betas expand capability: Computer Use (desktop control) and Code Execution (LLM-local Bash + file I/O). Treat these as privileged: they add power and risk (prompt injection, action blast radius).

Enterprise distribution (API, Bedrock, Vertex)

Claude ships on the Anthropic API and partner clouds: Amazon Bedrock and Google Cloud Vertex AI. The 1M-token expansion also rolled out on these channels, changing your operational envelope (latency, observability, limits).

Performance signals (how to read them)

On SWE-bench Verified, mid-2025 third-party runs report strong results for Opus 4/Sonnet 4 under specific scaffolds. Use leaderboards and public evals as signals, not absolute truth: SWE-bench.

Safety & governance

Claude 4 invoked the Responsible Scaling Policy with ASL-3 for Opus 4 and ASL-2 for Sonnet 4; runtime alignment adds I/O guards for narrow, high-risk content. Source: Claude 4 System Card.

Data handling & privacy

Consumer apps now prompt users to opt-in/opt-out of training (with extended retention if opted-in), while enterprise/API traffic is excluded. See current policy notes: Data usage.

Reliability patterns for long documents

Long windows can suffer “lost in the middle”; Anthropic guidance recommends quoting, section anchors, and explicit citations to mitigate—apply disciplined prompting and validation.
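A prompt that applies the quoting, section-anchor, and citation advice might be assembled like this. It is a sketch under assumptions: the `<section>` and `<quotes>` tag names and the instruction wording are illustrative choices, not a format prescribed by Anthropic.

```python
def build_long_doc_prompt(sections: dict, question: str) -> str:
    """Wrap each section in a tagged anchor and ask for quotes before the answer."""
    parts = []
    for name, text in sections.items():
        # The id gives the model a stable anchor to cite.
        parts.append(f'<section id="{name}">\n{text}\n</section>')
    instructions = (
        "First, copy the exact sentences relevant to the question into "
        "<quotes> tags, each prefixed with its section id. "
        "Then answer using only those quotes, citing section ids in brackets. "
        "If no quote supports a claim, say so."
    )
    return "\n\n".join(parts) + f"\n\n{instructions}\n\nQuestion: {question}"
```

Putting the documents first and the question last keeps the instructions close to where the model starts generating, which is a common convention for long prompts.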

Bottom line: Claude’s edge is the combo of very long context, tunable reasoning, tool/agent surfaces, and enterprise channels—used with disciplined prompting, scoped permissions, and channel-aware deployment.

Claude AI Pricing and Plans

Free Plan

Claude AI offers a free plan that allows individuals to test the assistant with limited daily usage.

- Access to core models (Haiku or Sonnet).

- Hard limits on requests and tokens.

- Not suitable for enterprise or sensitive data.

This plan is ideal for students, hobbyists, or anyone who wants to explore Claude AI at no cost.

Pro Plan

The Pro plan costs $20/month (as of Sept 2025).

- Includes higher usage quotas.

- Faster responses and priority during peak times.

- Access to advanced models like Claude 3 Opus.

Recommended for professionals, writers, and developers who need reliable access beyond free limits.

Team Plan

Claude for Work (Team) is priced at $30/user/month (monthly) or $25/user/month (annual).

- Collaboration features with shared workspaces.

- Admin tools for policy and billing.

- Default exclusion from training data.

This plan fits small teams and startups scaling AI use.

Enterprise Plan

Enterprise pricing is customized.

- Includes SLAs, dedicated support, and integrations.

- Available on Anthropic API, AWS Bedrock, and Google Cloud Vertex AI.

- Training exclusion and compliance by default.

Recommended for large organizations, governments, or regulated industries.

Key takeaway: Free is for light exploration, Pro unlocks reliability, Team enables collaboration, and Enterprise ensures compliance. Choose the Claude AI plan that matches your scale.

Pro vs Free

The key difference between Pro vs Free is reliability and scale. Free users face strict daily limits, while Pro subscribers get priority access, faster performance, and support for advanced models.

Risk Governance and Security in Claude AI Deployments

Scope and Rationale

This chapter consolidates documented risks and governance practices for deploying Claude AI at scale. The focus is on enterprise-ready safeguards: tool scopes, audit trails, prompt-injection defenses, Computer Use limitations, and regulatory compliance. All claims are grounded in Anthropic’s official documentation, system cards, and independent reporting.

Core Governance Principles

Claude AI Governance: Responsible Scaling at a Glance

Claude AI is deployed under a responsible scaling framework. Use this quick reference to align internal controls with model safety levels.

Principles

- Helpful, harmless, honest

- Constitutional AI + preference learning

- Defense-in-depth for agentic features

ASL Gates (Example)

- ASL-2: expanded evaluations, misuse safeguards

- ASL-3: hardened security controls & reviews

- Escalation: board/oversight consultation

Operational Hooks

- Model lifecycle tracking (deprecations)

- Change management & red-team reviews

- Audit trails for tool calls & decisions

Map internal risk tiers to ASL gates and require approvals for higher-risk Claude AI capabilities (e.g., Computer Use).

Tool scopes and audit trails

- MCP integrations simplify connecting Claude to business systems but increase exposure.

- Best practice: apply least-privilege scopes (only grant the minimum permissions needed), log all tool invocations, and audit which users or servers are connected.
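Least-privilege scopes and an audit trail can be combined in one wrapper around tool execution. The sketch below is hypothetical throughout: the caller names, the `ALLOWED_TOOLS` table, and the in-memory `AUDIT_LOG` stand in for whatever identity system and log sink an organization already runs.

```python
import json
import time

# Hypothetical scope table: which tools each caller may invoke.
ALLOWED_TOOLS = {
    "support-bot": {"read_ticket"},
    "ops-bot": {"read_ticket", "close_ticket"},
}

AUDIT_LOG = []  # stand-in for an append-only log sink


def invoke_tool(caller: str, tool: str, args: dict, handlers: dict):
    """Check the caller's scope, run the tool, and record one audit line."""
    allowed = tool in ALLOWED_TOOLS.get(caller, set())
    record = {"ts": time.time(), "caller": caller, "tool": tool,
              "args": args, "allowed": allowed}
    try:
        if not allowed:
            raise PermissionError(f"{caller} may not call {tool}")
        result = handlers[tool](**args)
        record["status"] = "ok"
        return result
    except Exception as exc:
        record["status"] = f"error: {exc}"
        raise
    finally:
        # Every attempt is logged, including denied and failed ones.
        AUDIT_LOG.append(json.dumps(record))
```

Logging in the `finally` clause means denied calls leave a trace too, which is usually the part an auditor cares about most.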

Prompt-injection and indirect inputs

- Claude agents can be manipulated via external content (web pages, support tickets).

- Risky actions (e.g., repository writes, billing changes) must be gated by human approval.

- Use sandboxed contexts for Computer Use, isolating risky flows from production.

Extended reasoning & cost controls

- Long contexts (200k–1M tokens) and extended thinking improve accuracy but inflate costs and latency.

- Enterprises should leverage token budgeting tools (e.g., Vertex count-tokens) and SDK-level controls to right-size prompts and reasoning budgets.
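A simple guard can enforce these limits before a request is sent. This is a sketch with loudly stated assumptions: `rough_token_estimate` uses a crude characters-per-token heuristic and should be replaced by a real token count from your provider, and the `BudgetGuard` class and its limits are hypothetical.

```python
from dataclasses import dataclass


def rough_token_estimate(text: str) -> int:
    """Crude heuristic (about 4 characters per token); swap in a real count."""
    return max(1, len(text) // 4)


@dataclass
class BudgetGuard:
    max_input_tokens: int  # per-request cap on prompt size
    max_total_tokens: int  # cap across the whole job
    max_retries: int       # retry cap per request
    used_tokens: int = 0

    def admit(self, prompt: str, thinking_budget: int = 0, attempt: int = 0) -> bool:
        """Return True and reserve tokens if the request fits every limit."""
        prompt_tokens = rough_token_estimate(prompt)
        planned = prompt_tokens + thinking_budget
        if attempt > self.max_retries:
            return False  # retry cap reached
        if prompt_tokens > self.max_input_tokens:
            return False  # prompt too large for this route
        if self.used_tokens + planned > self.max_total_tokens:
            return False  # circuit breaker: job budget exhausted
        self.used_tokens += planned
        return True
```

Counting the thinking budget alongside the prompt keeps a deep-reasoning route from quietly consuming a job's whole allowance.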

Claude AI Tool Use: Safer Function Calls

When connecting Claude AI to tools (APIs, databases), define strict JSON-schema interfaces and enforce least-privilege scopes.

Design Checklist

- Minimal function surface (inputs validated and typed)

- Hard limits: pagination, max rows, allowed domains

- Human approval gates for risky actions (writes/billing)

- Strict output format: model returns intent + params

- Comprehensive logging for audits & alerting

Risk → Mitigation

| Risk | Mitigation |
|---|---|
| Prompt injection | Sanitize inputs, restrict tools, require approvals |
| Data exfiltration | DLP rules, domain allow-lists, row caps |
| Runaway costs | Token budgets, retry caps, circuit breakers |

Require Claude AI to return a safe-plan (intent, inputs, expected effects) before tool execution.
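The safe-plan idea can be sketched as a validation step plus an approval gate that sits between the model's proposal and any execution. The intent names, the allow-list, and the `SafePlan` structure are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional

RISKY_INTENTS = {"write_repo", "change_billing"}   # assumed high-risk actions
ALLOWED_INTENTS = RISKY_INTENTS | {"read_ticket"}  # assumed allow-list


@dataclass
class SafePlan:
    intent: str
    params: dict
    expected_effects: str


def parse_plan(raw: dict) -> SafePlan:
    """Validate the model's proposed plan before anything executes."""
    missing = {"intent", "params", "expected_effects"} - raw.keys()
    if missing:
        raise ValueError(f"plan missing fields: {sorted(missing)}")
    if raw["intent"] not in ALLOWED_INTENTS:
        raise ValueError(f"intent not on allow-list: {raw['intent']}")
    if not isinstance(raw["params"], dict):
        raise ValueError("params must be an object")
    return SafePlan(raw["intent"], raw["params"], raw["expected_effects"])


def decide(plan: SafePlan, approved_by: Optional[str] = None) -> str:
    """Return 'execute' or 'needs_approval' for a validated plan."""
    if plan.intent in RISKY_INTENTS and not approved_by:
        return "needs_approval"
    return "execute"
```

Because the plan is validated as data before any handler runs, a prompt-injected instruction can at worst produce a rejected or pending plan rather than a side effect.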

Risk Categories

| Risk Type | Example Trigger | Governance Mitigation |
|---|---|---|
| Data leakage | Unrestricted tool access | OAuth, project-level permissions, retention rules |
| Prompt injection | Malicious inputs in tickets/emails | Human approval for high-risk actions |
| Cost overruns | 1M-token contexts or excessive retries | Token budgets, count-tokens, monitoring |
| System drift | Silent deprecations of Claude models | Subscribe to model lifecycle & deprecation feeds |
| Governance failures | Unlogged connectors or shadow tools | Centralized auth/logging; Cloudflare MCP hosting |

Compliance and Regulatory Readiness

- Enterprise distribution channels (Bedrock, Vertex) matter more than raw model capability. They enforce retention, logging, and regional controls aligned with compliance regimes like GDPR, FedRAMP, or SOC 2.

- Sector-specific adaptations:

  - Finance: full-transaction auditing (Brex case).

  - Education: privacy-preserving analytics at state/district level (Panorama case).

  - Public sector: audit-friendly procurement and FedRAMP High availability.

Practical Adoption Playbook

- Pick the right channel first: API/Bedrock/Vertex over consumer apps for enterprise control.

- Instrument for governance: gate risky features (Computer Use, repo writes) with approvals.

- Track lifecycle notices: avoid drift by pinning model IDs and rehearsing upgrades.

- Mirror ASL governance: replicate Anthropic’s AI Safety Level thresholds inside your org.

- Publish & monitor controls: use robots.txt and explicit opt-outs for content hosting.

Claude AI deployments succeed when governance is as deliberate as capability selection. Tool scopes, prompt hygiene, and channel choice (Bedrock/Vertex/API) determine whether Claude’s advanced context and reasoning are an asset or a liability. Organizations must move beyond “AI inside chat” toward embedded, auditable, and policy-aligned AI operations.

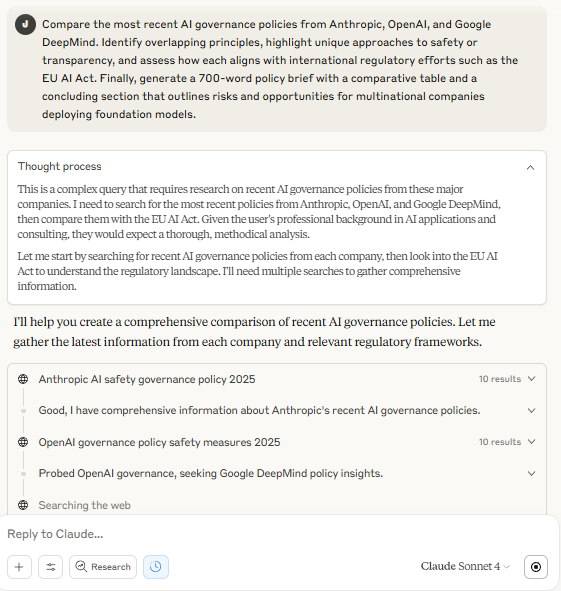

Governance and Transparency of Claude AI

Scope

This chapter reviews Anthropic’s governance structures—both corporate and technical—alongside the transparency artifacts it publishes, policy changes through 2024–2025, and independent evaluations/criticisms. All dates are anchored as of September 14, 2025. The analysis is grounded in Anthropic’s official documents and reputable third-party sources.

Corporate Governance: PBC + Long-Term Benefit Trust

Anthropic is structured as a Public Benefit Corporation (PBC). In 2023, it created a Long-Term Benefit Trust (LTBT), designed to embed public-interest oversight into corporate decisions. Over time, the LTBT is empowered to elect a majority of board seats, aligning the company’s strategic direction with long-term societal benefit, not just shareholder returns. Independent legal commentary highlights this as a unique governance innovation among AI labs.

Technical Governance: Responsible Scaling Policy (RSP) and ASL Gates

Anthropic’s Responsible Scaling Policy (RSP) links model deployment to AI Safety Levels (ASL). Each ASL defines required safeguards before release. For example, in May 2025, Anthropic launched Claude Opus 4 under ASL-3 and Sonnet 4 under ASL-2, citing precautionary risk evaluations in areas like cybersecurity and autonomy. Importantly, the RSP allows activation of a higher ASL even without formal threshold breaches, if risks are near the line.

The current RSP v2.x specifies ASL-3 deployment/security standards (e.g., resilience against persistent misuse, model weight protection from theft). Board approval and LTBT consultation are mandatory for escalation decisions, ensuring technical governance is tied back to corporate oversight.

Transparency Artifacts Published by Anthropic

- System Cards: Detailed evaluations, alignment methods, and mitigation strategies (e.g., Claude 4 System Card, May 2025).

- Policy Updates: August 2025 revisions to the Usage Policy clarified prohibitions on CBRN misuse and cybersecurity abuse. Guidance was added for safe use of agentic features like Computer Use.

- Deprecation Notices: for example, Claude 2, 2.1, and Sonnet 3 were retired July 21, 2025 (announced Jan 21, 2025).

- Data Handling Docs: Clear documentation of zero-data retention for APIs and enterprise plans, with consumer opt-in/out options separated.

External Evaluations and Criticisms

Foundation Model Transparency Index (FMTI).

Independent reviews report persistent opacity on parameters, compute budgets and corpus composition across most labs — Anthropic is no exception. See the May 2024 FMTI materials: Stanford FMTI.

Future of Life Institute (FLI) — AI Safety Index (Summer 2025).

FLI graded Anthropic C+ (best among peers), while many labs scored D or worse on existential-risk planning. Sources: FLI AI Safety Index, The Guardian coverage, TIME summary.

SaferAI (Oct 2024) — critique of Anthropic’s RSP.

SaferAI argued the Responsible Scaling Policy update became “less precise,” reducing auditability. Read the critique and the official policy: SaferAI analysis and Anthropic RSP (PDF).

Media reporting (2024–2025) — ClaudeBot crawler.

Multiple outlets covered heavy crawler load and transparency debates; Anthropic documents how to block its bots via robots.txt. See: The Verge report and Anthropic support: how to block ClaudeBot.

Practical Implications for Adopters

- Use Enterprise Channels: API, Bedrock, or Vertex provide predictable retention and training exclusions.

- Mirror ASL Logic: Internally define thresholds (e.g., when enabling Computer Use) to trigger tighter review or rollback.

- Track Deprecations: Pin model IDs and monitor release feeds to avoid governance drift.

- Publisher Controls: Implement robots.txt and audit Claude-related traffic if hosting sensitive content.
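For the publisher-controls item, a robots.txt rule can be checked locally before it is deployed. The sketch below uses Python’s standard `urllib.robotparser`; the `ClaudeBot` user-agent token is the one named in this article, while the `/private/` path and the example URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Example policy: keep ClaudeBot out of /private/, leave everything else open.
ROBOTS_TXT = """\
User-agent: ClaudeBot
Disallow: /private/

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

A check like this only confirms what the file says; whether a crawler honors it still has to be verified in server logs.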

Anthropic’s governance framework blends corporate design (PBC + LTBT) with technical safeguards (RSP + ASL). Its transparency program includes system cards, usage-policy updates, lifecycle notices, and data-handling documentation. Yet, fundamental gaps remain: opacity on model internals, debated sufficiency of the RSP, and the operational burden of crawler management. For rigorous adopters, the evidence-based path is layered defense, enterprise deployment channels, and continuous alignment of governance registers to evolving policies.

Limitations and Challenges of Claude AI

Claude AI is powerful, but the research file highlights clear limitations and open challenges. Understanding these is crucial for enterprises, researchers, and developers evaluating deployment risks.

Hallucinations and Reliability

- Claude, like all LLMs, still produces hallucinations—confident but false statements.

- Long-context windows (200k–1M tokens) improve breadth but introduce “lost in the middle” effects, where information buried deep in a document is ignored or misrepresented.

- Independent audits note these weaknesses remain, even as Anthropic recommends quoting and section anchoring to mitigate.

Cost and Latency Trade-offs

- Extended thinking (allocating token budgets for internal reasoning) raises latency and cost.

- 1M-token contexts are resource-heavy; poorly designed prompts can inflate usage without improving accuracy.

- Enterprises must apply budgeting tools, logging, and monitoring to keep deployments efficient.

Risks of Agentic Features

- Computer Use (desktop automation) and Code Execution (local sandbox) create new attack surfaces.

- Without strict guardrails, models can be manipulated through malicious inputs, leading to unwanted system actions.

- Governance practices—least-privilege access, human approvals for high-risk actions, and logging—are required.

Transparency Gaps

- Anthropic does not disclose parameter counts, compute budgets, or full dataset composition.

- The Foundation Model Transparency Index (FMTI) identifies this opacity as a recurring sector-wide issue.

- SaferAI and FLI reports note that while Anthropic publishes system cards and safety policies, existential risk planning is graded poorly (D or worse industry-wide).

Deprecations and Lifecycle Friction

- Claude 2, 2.1, and Sonnet 3 were retired in July 2025.

- Such lifecycle changes require users to pin model IDs, monitor announcements, and refactor pipelines.

- Teams that fail to track deprecations risk sudden capability loss in production systems.

Public Criticisms and Adoption Risks

- Media reported ClaudeBot’s crawler load as disruptive, raising questions on operational transparency.

- NGOs (SaferAI, FLI) argue Anthropic’s governance documents are too high-level to be fully auditable.

- Users in regulated sectors must independently validate compliance rather than relying solely on vendor claims.

Comparison Table — Limitations vs. Mitigations

| Limitation | Impact | Mitigation Strategy |
|---|---|---|
| Hallucinations | Inaccurate or false outputs | Use citations, anchor prompts, human review |
| Lost in the middle | Missed info in long contexts | Structure docs, use anchors & retrieval aids |
| High cost of long contexts | Budget overruns, latency spikes | Token budgeting, monitoring, batch API |
| Agentic feature risks | Unintended system actions | Guardrails, least privilege, approvals |
| Transparency gaps | Limited auditability | Internal benchmarks, cross-model evaluation |
| Deprecation cycles | Pipeline instability | Pin IDs, track lifecycle announcements |

Claude AI’s limitations are not deal-breakers, but they require disciplined governance. Hallucinations, cost spikes, and transparency gaps mean enterprises must combine technical mitigations (prompt design, token budgets) with operational controls (approvals, audits, lifecycle tracking). Responsible adoption demands treating these challenges as ongoing risk factors, not solved problems.

Future Outlook and Roadmap for Claude AI

Claude AI has advanced rapidly between 2023 and 2025. The research materials outline not just what has been released, but where Anthropic signals the product is headed. This chapter reviews roadmap themes, expected innovations, and open questions about future governance.

Scaling Context and Reasoning

- From 200k tokens in Claude 2.1 to 1M tokens in Sonnet 4 (Aug 2025), Anthropic has consistently pushed long-context leadership.

- The roadmap suggests further stability improvements for ultra-long contexts, addressing “lost in the middle” through new retrieval and anchoring strategies.

- Expect refinements in extended thinking budgets: more transparent traces and better cost–latency trade-offs.

Making Long Context Work with Claude AI

Long windows in Claude AI enable whole-repo or multi-document analysis, but reliability hinges on prompt structure and verification.

Anchor & Quote

Quote exact lines/sections (“anchor quotes”) and ask for in-text citations to reduce “lost-in-the-middle”.

Section Prompts

Break tasks: per-section extraction → synthesis → final audit. Keep instructions local to each section.

Verification

Require a “claims list” with source spans (file, line/heading) and re-ask for missing citations.
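The verification step can be partly automated by checking that every quoted span in the claims list actually appears in the cited source. This is a minimal sketch: the claim fields (`claim`, `source`, `quote`) and the whitespace-insensitive matching rule are assumptions, and a failed match should trigger a re-ask or human review rather than silent rejection.

```python
def verify_claims(claims: list, sources: dict) -> list:
    """Mark a claim supported only if its quote appears in the cited source."""

    def normalize(text: str) -> str:
        # Collapse whitespace and case so line wraps do not break matching.
        return " ".join(text.split()).lower()

    report = []
    for claim in claims:
        source_text = sources.get(claim.get("source", ""), "")
        quote = claim.get("quote", "")
        supported = bool(quote) and normalize(quote) in normalize(source_text)
        report.append({**claim, "supported": supported})
    return report
```

Exact-substring matching is deliberately strict: paraphrased "quotes" fail the check, which is the behavior you want when the goal is auditable citations.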

Expansion of Agentic Capabilities

- Current betas (Computer Use, Code Execution) show a move toward more autonomous task handling.

- Roadmap items include stronger sandboxing, human-in-the-loop controls, and enterprise auditing features.

- If matured safely, these features could shift Claude from “assistant” to general-purpose digital co-worker.

Integration into Enterprise Channels

- Anthropic continues to prioritize Amazon Bedrock and Google Cloud Vertex AI.

- The roadmap indicates broader regional availability, improved observability (SSO, logging, quotas), and compliance features (FedRAMP, GDPR, SOC).

- Expect deeper alignment with the Model Context Protocol (MCP), standardizing how Claude connects with tools and data.

Governance and Responsible Scaling

- The Responsible Scaling Policy (RSP) and AI Safety Levels (ASL) will remain central.

- Future updates are expected to clarify thresholds for agentic autonomy and cybersecurity misuse.

- Independent watchdogs (SaferAI, FLI) press for greater transparency. Whether Anthropic responds with more granular disclosures (parameters, training compute) remains an open question.

Anticipated Adoption Trends

Finance & Legal

- Brex: deeper product integration using Claude on Amazon Bedrock for spend management. Case study.

- Harvey (legaltech): platform-wide use of Claude for contracts and litigation; multi-model strategy. Customer story.

Education

- State/university procurement: launch of Claude for Education in AWS Marketplace (streamlined billing & privacy-first deployments). AWS Public Sector blog.

Government

- Security authorizations: FedRAMP High & DoD IL4/IL5 availability for Claude via AWS GovCloud → beyond pilot programs. Claude for Government, AWS GovCloud announcement.

Developers

- Budgeting & cost controls: Usage & Cost API for programmatic spend/usage analytics. Anthropic Docs, Usage & Cost API.

- Logging/observability: native integrations (e.g., Honeycomb) for token/cost attribution and OpenTelemetry. Honeycomb integration.
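To make the budgeting and cost-attribution idea concrete, here is a minimal sketch of per-team spend tracking. It does not call the Usage & Cost API or reproduce its response format: the record fields (`team`, `model`, `input_tokens`, `output_tokens`), the model names, and the per-million-token prices are all hypothetical placeholders to replace with your own export and current published rates.

```python
from collections import defaultdict

# Hypothetical USD prices per million tokens; replace with current published rates.
PRICES_PER_MTOK = {
    "example-small": {"input": 1.00, "output": 5.00},
    "example-large": {"input": 3.00, "output": 15.00},
}


def record_cost(record: dict) -> float:
    """Cost in USD of one usage record, priced separately for input and output tokens."""
    price = PRICES_PER_MTOK[record["model"]]
    return (record["input_tokens"] * price["input"]
            + record["output_tokens"] * price["output"]) / 1_000_000


def cost_by_team(records: list[dict]) -> dict[str, float]:
    """Sum the cost of all records, grouped by the 'team' tag."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[record["team"]] += record_cost(record)
    return dict(totals)


def over_budget(totals: dict[str, float], budgets: dict[str, float]) -> list[str]:
    """Return teams whose spend exceeds their budget (teams without a budget get 0)."""
    return sorted(team for team, spent in totals.items()
                  if spent > budgets.get(team, 0.0))
```

Treating a team with no configured budget as having a budget of zero is a deliberate choice in this sketch: untagged or unplanned usage surfaces immediately instead of going unnoticed.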

Comparison Table — Roadmap Themes

| Roadmap Area | Current State (2025) | Anticipated Next Step |
|---|---|---|
| Context length | 1M tokens (Sonnet 4) | Greater stability & retrieval support |
| Extended reasoning | Token budgets, manual tuning | Smarter auto-scaling of reasoning depth |
| Agentic features | Computer Use & Code Execution (beta) | Hardened sandboxes, enterprise controls |
| Enterprise integration | Bedrock, Vertex AI | Wider regions, stronger observability |
| Governance | RSP v2.x, ASL 2–3 deployments | Clearer thresholds, transparency gains |

The Claude AI roadmap signals continued focus on long context, safer autonomy, and enterprise-grade governance. Anthropic’s challenge is to balance innovation (agentic tools, extended reasoning) with the transparency and controls demanded by enterprises and regulators. The next two years will determine whether Claude cements its role not just as a leading assistant, but as a trusted digital collaborator across industries.

How to Start Using Claude AI

Getting started with Claude AI is straightforward. Follow these simple steps to unlock its potential:

- Create your free account and sign in.

- Define a clear goal (e.g., draft a summary, generate code, analyze text).

- Provide context (document, dataset, or instructions).

- Ask Claude AI to generate multiple versions and compare results.

- Review outputs, refine prompts, and validate against your needs.

Example prompt: “Act as a teaching assistant. Using Claude AI, create a 45-minute lesson plan on climate change for high school students. Include objectives, activities, and a quick assessment.”

FAQ

Q1: Is Claude AI free?

Yes. Claude AI offers a Free plan with limited daily usage (for individuals). See the official plan table: Choosing a Claude Plan.

Q2: How much does Claude AI Pro cost?

As of September 2025, Claude Pro is $20/month in the U.S. (regional prices may vary). Sources: Claude Pro announcement and the current plan table: Plans & pricing table.

Q3: What is the difference between Claude Pro and Free?

Free users face strict daily limits and may see slower access at peak times. Pro provides higher quotas, priority access, and access to advanced models (varies by plan). Compare here: Choosing a Claude Plan.

Q4: Does Anthropic offer enterprise pricing?

Yes. For organizations, Claude for Work offers Team and Enterprise plans.

Team pricing: $25/user/month (annual) or $30/user/month (monthly), min. 5 seats; Premium seats at $150/user/month. Details: Team plan billing and announcement: Team plan ($30/mo) launch. Enterprise is custom-priced.
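The seat arithmetic above can be checked with a short calculation. This is a rough sketch using only the figures quoted in this answer. It makes two assumptions the text does not confirm: that the 5-seat minimum applies to the total of standard and Premium seats, and that the Premium seat price is the same on both billing cycles. The function and constant names are illustrative.

```python
MIN_SEATS = 5                                     # minimum seats quoted above
STANDARD_MONTHLY = {"annual": 25, "monthly": 30}  # USD per user per month
PREMIUM_MONTHLY = 150                             # USD per user per month (assumed for both cycles)


def team_monthly_cost(standard_seats: int, premium_seats: int = 0,
                      billing: str = "annual") -> int:
    """Monthly Team plan cost in USD for the given seat mix and billing cycle."""
    if billing not in STANDARD_MONTHLY:
        raise ValueError(f"billing must be one of {sorted(STANDARD_MONTHLY)}")
    if standard_seats + premium_seats < MIN_SEATS:
        # Assumption: the minimum counts standard and Premium seats together.
        raise ValueError(f"Team plans require at least {MIN_SEATS} seats")
    return standard_seats * STANDARD_MONTHLY[billing] + premium_seats * PREMIUM_MONTHLY


def annual_savings(standard_seats: int, premium_seats: int = 0) -> int:
    """USD saved over 12 months by choosing annual instead of monthly billing."""
    monthly = team_monthly_cost(standard_seats, premium_seats, "monthly")
    annual = team_monthly_cost(standard_seats, premium_seats, "annual")
    return (monthly - annual) * 12
```

For example, ten standard seats work out to $250 per month on annual billing versus $300 on monthly billing, a difference of $600 over a year. Confirm current prices against the official plan table before budgeting.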

Q5: Does Claude AI use my data for training?

For consumer plans (Free, Pro, Max): starting Aug 28, 2025, you can opt in or out of training. If you opt in, chats may be retained for up to 5 years; if not, for 30 days. For commercial offerings (Team, Enterprise, API), training is excluded by default (with configurable retention and zero-retention options). See: Data usage & retention.