Introduction

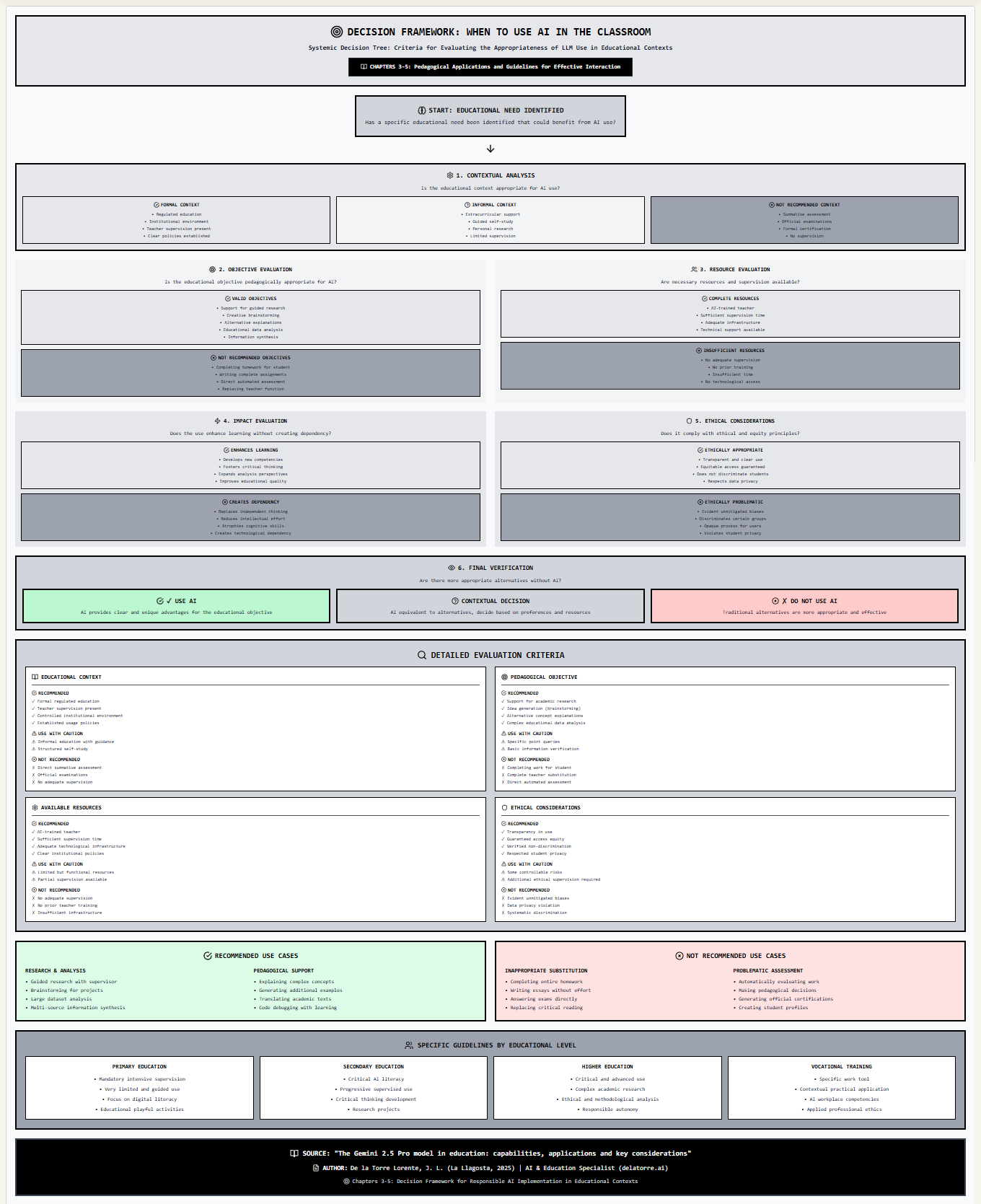

Integrating artificial intelligence (AI) into the classroom can unlock powerful benefits, such as enhanced research support, creative brainstorming, and personalized feedback, but it also carries risks like over-reliance, bias, and privacy concerns. This article explains a six-step AI Decision Framework designed to help educators decide when and how to apply AI tools effectively and ethically in educational settings.

1. Start: Identify the Educational Need

Before any AI implementation, confirm there is a specific educational need. This initial trigger ensures that AI is not used arbitrarily but addresses measurable learning objectives. Once you’ve identified a genuine need (e.g., data analysis support, concept explanation), proceed through the decision tree.

2. Step 1 – Contextual Analysis

Key Question: Is the educational context appropriate for AI?

- Recommended (Formal Context):
  - Regulated environments (schools, universities)
  - Teacher supervision in place
  - Clear institutional policies on AI use
- Acceptable with Caution (Informal Context):
  - Extracurricular tutoring or guided self-study
  - Research groups with limited oversight
- Not Recommended:
  - High-stakes assessments (exams, certifications)
  - Situations with no supervision or policy
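
For readers who want to encode this step in a checklist tool, the three context ratings can be sketched as a small classifier. This is only an illustrative sketch: the `Context` fields and the `rate_context` function are hypothetical names, and reading "no supervision or policy" as "neither supervision nor policy is present" is an assumption, not something the framework states.

```python
from dataclasses import dataclass
from enum import Enum


class Rating(Enum):
    RECOMMENDED = "recommended"
    CAUTION = "acceptable with caution"
    NOT_RECOMMENDED = "not recommended"


@dataclass
class Context:
    # Hypothetical field names that mirror the criteria listed for Step 1.
    regulated_environment: bool   # school or university setting
    teacher_supervision: bool     # a teacher monitors AI use
    institutional_policy: bool    # a clear policy on AI use exists
    high_stakes_assessment: bool  # exam or certification


def rate_context(ctx: Context) -> Rating:
    """Map a context description to one of the three Step 1 ratings."""
    # Exclusions are checked first: high-stakes assessments are ruled out.
    if ctx.high_stakes_assessment:
        return Rating.NOT_RECOMMENDED
    # Assumption: "no supervision or policy" means both are missing.
    if not ctx.teacher_supervision and not ctx.institutional_policy:
        return Rating.NOT_RECOMMENDED
    # Formal context: regulated, supervised, and covered by a policy.
    if (ctx.regulated_environment
            and ctx.teacher_supervision
            and ctx.institutional_policy):
        return Rating.RECOMMENDED
    # Everything in between is treated as an informal context.
    return Rating.CAUTION
```

For example, a supervised after-school tutoring session without an institutional policy would come out as "acceptable with caution" under these assumptions.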

3. Step 2 – Pedagogical Objective Evaluation

Key Question: Does the learning objective align with AI capabilities?

- Valid Objectives (Use AI):
  - Guided academic research
  - Creative idea generation (brainstorming)
  - Alternative explanations of complex concepts
  - Educational data analysis and information synthesis
- Invalid Objectives (Avoid AI):
  - Completing student assignments entirely
  - Automated grading without human oversight
  - Full replacement of teacher functions

4. Step 3 – Resource and Supervision Check

Key Question: Are the necessary resources and supervision available?

- Adequate Resources:
  - Teachers trained in AI integration
  - Sufficient time for monitoring AI interactions
  - Reliable technological infrastructure and technical support
- Insufficient Resources:
  - Lack of training or knowledge about AI tools
  - Limited supervision time
  - Poor or absent access to required hardware/software

5. Step 4 – Impact and Dependency Assessment

Key Question: Will AI use enhance learning without fostering dependency?

- Positive Impact:
  - Develops critical thinking and new competencies
  - Broadens analytical perspectives
  - Improves overall educational quality
- Risk of Dependency:
  - Diminishes independent problem-solving skills
  - Reduces intellectual effort and creativity
  - Creates long-term reliance on AI technology

6. Step 5 – Ethical and Equity Considerations

Key Question: Does the AI application respect ethical principles and equity?

- Ethically Appropriate:
  - Transparent AI processes and clear user guidance
  - Equal access for all learners
  - No discrimination or bias against any group
  - Strict protection of student data privacy
- Ethically Problematic:
  - Unmitigated biases in AI outputs
  - Discriminatory or exclusionary behaviors
  - Opaque algorithms with no explainability
  - Privacy breaches or unauthorized data use

7. Step 6 – Final Verification of Alternatives

Key Question: Are there more appropriate non-AI alternatives?

- Use AI: If AI delivers unique, measurable advantages.
- Contextual Decision: If AI and traditional methods offer similar outcomes, choose based on available resources and preferences.
- Do Not Use AI: If conventional approaches are clearly superior in effectiveness or safety.
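
The three outcomes of this final check can be sketched as a simple comparison. The framework does not say how "measurable advantages" or "similar outcomes" should be quantified, so the numeric scores, the `tolerance` threshold, and the `compare_alternatives` function below are all assumptions made for illustration (for instance, scores could come from a shared rubric applied to both approaches).

```python
from enum import Enum


class Decision(Enum):
    USE_AI = "Use AI"
    CONTEXTUAL = "Contextual Decision"
    DO_NOT_USE_AI = "Do Not Use AI"


def compare_alternatives(ai_score: float,
                         traditional_score: float,
                         tolerance: float = 0.1) -> Decision:
    """Compare an AI approach with a non-AI alternative.

    Both scores are hypothetical ratings on the same scale (higher is
    better). Differences within `tolerance` count as "similar outcomes".
    """
    if tolerance < 0:
        raise ValueError("tolerance must be non-negative")
    difference = ai_score - traditional_score
    if difference > tolerance:
        # AI offers a clear, measurable advantage.
        return Decision.USE_AI
    if difference < -tolerance:
        # The conventional approach is clearly superior.
        return Decision.DO_NOT_USE_AI
    # Outcomes are similar: decide on resources and preferences.
    return Decision.CONTEXTUAL
```

A score alone cannot capture the safety dimension mentioned above, so in practice a safety concern would likely override the numeric comparison.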

How to Read the Framework Infographic

- Follow the arrows from “START” through each decision node.
- Interpret the colors:
  - Green shades = recommended
  - Gray shades = use with caution
  - Red shades = not recommended
- Note the icons (gear for context, target for objectives, users for resources, zap for impact, shield for ethics, eye for final check) to quickly identify each section.
- Consult the detailed criteria grid for specific indicators under each category (context, resources, objectives, ethics).
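
The reading order and color legend can also be expressed as a short sketch that walks the decision nodes in sequence. The node names and icons follow the list above; the `walk_framework` function and the rule that a red result ends the walk are assumptions for illustration, since the infographic itself defines the actual branching.

```python
from enum import Enum


class Rating(Enum):
    RECOMMENDED = "recommended"
    CAUTION = "use with caution"
    NOT_RECOMMENDED = "not recommended"


# Color legend as described for the infographic.
LEGEND = {
    Rating.RECOMMENDED: "green",
    Rating.CAUTION: "gray",
    Rating.NOT_RECOMMENDED: "red",
}

# Decision nodes in reading order, paired with their icons.
NODES = [
    ("context", "gear"),
    ("objectives", "target"),
    ("resources", "users"),
    ("impact", "zap"),
    ("ethics", "shield"),
    ("final check", "eye"),
]


def walk_framework(ratings: dict[str, Rating]) -> list[tuple[str, str, str]]:
    """Visit each node in order and record (node, icon, color).

    Assumption: the walk stops at the first "not recommended" node.
    """
    path = []
    for name, icon in NODES:
        rating = ratings[name]
        path.append((name, icon, LEGEND[rating]))
        if rating is Rating.NOT_RECOMMENDED:
            break
    return path
```

Calling `walk_framework` with a rating for every node returns the path a reader would trace on the infographic, ending early if any step is rated "not recommended".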

Conclusion

This AI Decision Framework provides a clear, structured approach to adopting AI in educational contexts. By systematically analyzing context, objectives, resources, impact, ethics, and alternatives, educators can ensure that AI tools enhance learning outcomes responsibly. Use this framework to make informed, transparent decisions and maximize the positive impact of AI in your classroom.